动态规划中的巴拿赫不动点定理

- 5. Banach Fixed Point Theorem in Dynamic Programming

-

- 5.1 巴拿赫不动点定理定理 (Banach fixed point theorem)

-

-

- 定义 5.1 度量空间定义

- 定义 5.2 压缩映射

- Lemma 5.1 基本压缩不等式 (Fundamental contraction inequality)

- Corollary 5.1 压缩映射的固定点

- Proposition 5.1 柯西序列

- Theorem 5.1 巴拿赫不动点定理 (The Banach fixed-point theorem)

-

- 5.2 λ\lambdaλ-几何平均贝尔曼算子 (λ\lambdaλ-geometrically averaged Bellman operator)

-

-

- Proposition 5.2 mmm步的几何平均贝尔曼算子的压缩特性 (Contraction property of mmm-step geometrically averaged Bellman operator)

- Proposition 5.3 λ\lambdaλ几何平均贝尔曼算子的压缩性 (Contraction property of the λ\lambdaλ-geometrically averaged Bellman operator)

-

- 5.3 λ\lambdaλ-策略迭代算法 (λ\lambdaλ-Policy Iteration Algorithm)

-

-

- Lemma 5.2 λ\lambdaλ-级联的贝尔曼算子的单调性和恒定位移特性 (Monotonicity and constant shift of the λ\lambdaλ-cascaded Bellman operator)

- Proposition 5.4 λ\lambdaλ-级联的贝尔曼算子的压缩特性 (Contraction property of the λ\lambdaλ-cascaded Bellman operator)

- Proposition 5.5 最优λ\lambdaλ-PI算法的收敛性 (Convergence of Optimistic λ\lambdaλ-PI Algorithm)

-

- 5.4 实例

-

- Question 1: Optimistic λ\lambdaλ -Policy Iteration: E-Bus, extended

-

- Task 1

- Task 2

5. Banach Fixed Point Theorem in Dynamic Programming

如上两节所示,VI算法和PI算法都拥有各自的优势和劣势。具体来说,VI算法是一种简单的迭代算法,但它在总成本函数空间的收敛性方面可能是低效的。虽然PI算法在探索策略空间的有限性和在策略空间中达到更好的收敛性方面有一个很好的特性,但它仍然会受到精确策略评估的瓶颈影响。尽管OPI算法自然地连接了这两种算法,但它的性能是由有限策略评估的数量选择决定的。在本节中,我们旨在通过探索巴拿赫固定点定理的属性来缓解这种限制,并仍然保留简单和快速收敛的有希望的属性。

5.1 巴拿赫不动点定理定理 (Banach fixed point theorem)

首先,为了讨论的完整性,我们回顾一下最近提出的、证明压缩原理的另一种方法[19]。

定义 5.1 度量空间定义

度量空间是一有序对(X,d)(\mathcal{X}, d)(X,d)

(X,d)(\mathcal{X}, d)(X,d) 其中X\mathcal{X}X是一个集合, ddd 是一个在 X\mathcal{X}X的度量, 即, 一个函数d:X×X→Rd: \mathcal{X} \times \mathcal{X} \rightarrow \mathbb{R}d:X×X→R, 对于所有x,y,z∈Xx, y, z \in \mathcal{X}x,y,z∈X, 以下关系成立:

-

d(x,y)=0?x=yd(x, y)=0 \Longleftrightarrow x=yd(x,y)=0?x=y 不可分者同一性原理 (identity of indiscernibles),

-

d(x,y)=d(y,x)d(x, y)=d(y, x)d(x,y)=d(y,x) 对称性 (symmetry)

-

d(x,z)≤d(x,y)+d(y,z)d(x, z) \leq d(x, y)+d(y, z)d(x,z)≤d(x,y)+d(y,z) 三角不等式 (triangle inequality).

定义 5.2 压缩映射

令(X,d)(\mathcal{X}, d)(X,d)为一度量空间,映射关系 T:X→X\mathrm{T}: \mathcal{X} \rightarrow \mathcal{X}T:X→X被称为在X\mathcal{X}X上的压缩映射,如果存在γ∈[0,1)\gamma \in[0,1)γ∈[0,1), 使得

d(T(x),T(y))≤γd(x,y),for all x,y∈X(5.1)d(\mathrm{~T}(x), \mathrm{T}(y)) \leq \gamma d(x, y), \quad \text { for all } x, y \in \mathcal{X}\tag{5.1}d( T(x),T(y))≤γd(x,y), for all x,y∈X(5.1)

Lemma 5.1 基本压缩不等式 (Fundamental contraction inequality)

如果T:X→X\mathrm{T}: \mathcal{X} \rightarrow \mathcal{X}T:X→X 是一个压缩常数为γ\gammaγ的压缩映射,那么对于在 X\mathcal{X}X 的任意x,yx, yx,y

d(x,y)≤11?γ(d(x,T(x))+d(y,T(y)))(5.2)d(x, y) \leq \frac{1}{1-\gamma}(d(x, \mathrm{~T}(x))+d(y, \mathrm{~T}(y)))\tag{5.2}d(x,y)≤1?γ1?(d(x, T(x))+d(y, T(y)))(5.2)

Proof

根据三角不等式和T\mathrm{T}T是一个压缩映射的事实,我们有

d(x,y)≤d(x,T(x))+d(T(x),T(y))+d(T(y),y)≤d(x,T(x))+γd(x,y)+d(T(y),y)(5.3)\begin{aligned} d(x, y) & \leq d(x, \mathrm{~T}(x))+d(\mathrm{~T}(x), \mathrm{T}(y))+d(\mathrm{~T}(y), y) \\ & \leq d(x, \mathrm{~T}(x))+\gamma d(x, y)+d(\mathrm{~T}(y), y) \end{aligned}\tag{5.3}d(x,y)?≤d(x, T(x))+d( T(x),T(y))+d( T(y),y)≤d(x, T(x))+γd(x,y)+d( T(y),y)?(5.3)

即证。

Corollary 5.1 压缩映射的固定点

一个压缩映射T\mathrm{T}T最多有一个固定点。

Proof

让我们假设压缩映射 TTT有一个以上的固定点,例如xxx和yyy,d(x,y)=0.d(x, y)=0.d(x,y)=0. 根据T\mathrm{T}T的固定点定义,我们得到d(x,T(x))=0d(x, \mathrm{~T}(x))=0d(x, T(x))=0 和d(y,T(y))=0d(y, \mathrm{~T}(y))=0d(y, T(y))=0。根据Lemma 5.1,我们有d(x,y)=0.d(x, y)=0.d(x,y)=0. 通过矛盾法,即证。

Proposition 5.1 柯西序列

如果T\mathrm{T}T是一个压缩,那么对于任何x∈Xx\in \mathcal{X}x∈X,Tk(x)\mathrm{T}^{k}(x)Tk(x)下xxx的迭代序列T\mathrm{T}T是一个柯西序列 (Cauchy sequence)。

Proof

让x1=Tk(x)x_{1}=\mathrm{T}^{k}(x)x1?=Tk(x) 和x2=Tl(x).x_{2}=\mathrm{T}^{l}(x).x2?=Tl(x). 那么,根据基本压缩不等式,我们有

d(Tk(x),Tl(x))≤11?γ(d(Tk(x),Tk+1(x))+d(Tl(x),Tl+1(x)))≤γk+γl1?γ(d(x,T(x))+d(x,T(x)))(5.4)\begin{aligned} d\left(\mathrm{~T}^{k}(x), \mathrm{T}^{l}(x)\right) & \leq \frac{1}{1-\gamma}\left(d\left(\mathrm{~T}^{k}(x), \mathrm{T}^{k+1}(x)\right)+d\left(\mathrm{~T}^{l}(x), \mathrm{T}^{l+1}(x)\right)\right) \\ & \leq \frac{\gamma^{k}+\gamma^{l}}{1-\gamma}(d(x, \mathrm{~T}(x))+d(x, \mathrm{~T}(x))) \end{aligned}\tag{5.4}d( Tk(x),Tl(x))?≤1?γ1?(d( Tk(x),Tk+1(x))+d( Tl(x),Tl+1(x)))≤1?γγk+γl?(d(x, T(x))+d(x, T(x)))?(5.4)

显然,当kkk和lll趋于无穷大时,d(Tk(x),Tl(x))d\left(\mathrm{~T}^{k}(x), \mathrm{T}^{l}(x)\right)d( Tk(x),Tl(x))的距离会归于零。最后,我们准备提出经典的巴拿赫不动点定理(Banach fixed-point theorem)。

Theorem 5.1 巴拿赫不动点定理 (The Banach fixed-point theorem)

让(X,d)(\mathcal{X}, d)(X,d)是一个非空的完整度量空间,有一个压缩映射T\mathrm{T}T。那么T\mathrm{T}T就有一个唯一的固定点x?x^{*}x?。即 T(x?)=x?\mathrm{T}\left(x^{*}\right)=x^{*}T(x?)=x?。此外,x?x^{*}x?可以通过迭代来找到。

xn+1=T(xn),with arbitrary x0∈X(5.5)x_{n+1}=\mathrm{T}\left(x_{n}\right), \quad \text { with arbitrary } x_{0} \in \mathcal{X}\tag{5.5}xn+1?=T(xn?), with arbitrary x0?∈X(5.5)

也就是说,当n→∞n\rightarrow\inftyn→∞时,有xn→x?x_{n} \rightarrow x^{*}xn?→x? 。

Proof

直接的,我们有

d(Tk(x),x?)≤γk1?γd(x,T(x))(5.6)d\left(\mathrm{~T}^{k}(x), x^{*}\right) \leq \frac{\gamma^{k}}{1-\gamma} d(x, \mathrm{~T}(x))\tag{5.6}d( Tk(x),x?)≤1?γγk?d(x, T(x))(5.6)

即, k→∞k \rightarrow \inftyk→∞ 导致Tk(x)\mathrm{T}^{k}(x)Tk(x)收敛到 x?x^{*}x?.

5.2 λ\lambdaλ-几何平均贝尔曼算子 (λ\lambdaλ-geometrically averaged Bellman operator)

利用OPI算法的一个可能的方法是将各种OPI算法与不同步骤的策略评估集合起来。具体来说,我们可以根据Eq.(4.33)\mathrm{Eq}.(4.33)Eq.(4.33)中定义的mmm步骤贝尔曼算子的集合构建一个几何平均贝尔曼算子。

Tπ,λm:RK→RK,J?1∑i=1mλi?1∑i=1mλi?1TπiJ=1?λ1?λm∑i=1mλi?1TπiJ(5.7)\begin{aligned} \mathrm{T}_{\pi, \lambda}^{m}: \mathbb{R}^{K} \rightarrow \mathbb{R}^{K}, \quad J & \mapsto \frac{1}{\sum_{i=1}^{m} \lambda^{i-1}} \sum_{i=1}^{m} \lambda^{i-1} \mathrm{~T}_{\pi}^{i} J \\ &=\frac{1-\lambda}{1-\lambda^{m}} \sum_{i=1}^{m} \lambda^{i-1} \mathrm{~T}_{\pi}^{i} J \end{aligned}\tag{5.7}Tπ,λm?:RK→RK,J??∑i=1m?λi?11?i=1∑m?λi?1 Tπi?J=1?λm1?λ?i=1∑m?λi?1 Tπi?J?(5.7)

很容易看出,总成本函数JπJ_{\pi}Jπ?是所有mmm步贝尔曼算子的唯一固定点。它的压缩特性在下面的结果中得到了描述。

Proposition 5.2 mmm步的几何平均贝尔曼算子的压缩特性 (Contraction property of mmm-step geometrically averaged Bellman operator)

给定无限范围的MDP{X,U,p,g,γ}M D P\{\mathcal{X}, \mathcal{U}, p, g, \gamma\}MDP{ X,U,p,g,γ}, 令J,J′∈RKJ, J^{\prime} \in \mathbb{R}^{K}J,J′∈RK, m?m-m?步截断的、几何平均的贝尔曼算子是一个关于无穷范数的压缩映射,即:

∥Tπ,λmJ?Tπ,λmJ′∥∞≤γ(1?λmγm)(1?λ)(1?λγ)(1?λm)∥J?J′∥∞(5.8)\left\|\mathrm{T}_{\pi, \lambda}^{m} J-\mathrm{T}_{\pi, \lambda}^{m} J^{\prime}\right\|_{\infty} \leq \frac{\gamma\left(1-\lambda^{m} \gamma^{m}\right)(1-\lambda)}{(1-\lambda \gamma)\left(1-\lambda^{m}\right)}\left\|J-J^{\prime}\right\|_{\infty}\tag{5.8}∥∥?Tπ,λm?J?Tπ,λm?J′∥∥?∞?≤(1?λγ)(1?λm)γ(1?λmγm)(1?λ)?∥J?J′∥∞?(5.8)

Proof

根据无穷范数的三角不等式,可以得出

∥Tπ,λmJ?Tπ,λmJ′∥∞=1?λ1?λm∥∑i=1mλi?1(TπiJ?TπiJ′)∥∞≤1?λ1?λm(∑i=1mλi?1∥TπiJ?TπiJ′∥∞)≤1?λ1?λm(∑i=1mλi?1γi∥J?J′∥∞)≤γ(1?λ)(1?λmγm)(1?λγ)(1?λm)∥J?J′∥∞(5.9)\begin{aligned} \left\|\mathrm{T}_{\pi, \lambda}^{m} J-\mathrm{T}_{\pi, \lambda}^{m} J^{\prime}\right\|_{\infty} &=\frac{1-\lambda}{1-\lambda^{m}}\left\|\sum_{i=1}^{m} \lambda^{i-1}\left(\mathrm{~T}_{\pi}^{i} J-\mathrm{T}_{\pi}^{i} J^{\prime}\right)\right\|_{\infty} \\ & \leq \frac{1-\lambda}{1-\lambda^{m}}\left(\sum_{i=1}^{m} \lambda^{i-1}\left\|\mathrm{~T}_{\pi}^{i} J-\mathrm{T}_{\pi}^{i} J^{\prime}\right\|_{\infty}\right) \\ & \leq \frac{1-\lambda}{1-\lambda^{m}}\left(\sum_{i=1}^{m} \lambda^{i-1} \gamma^{i}\left\|J-J^{\prime}\right\|_{\infty}\right) \\ & \leq \frac{\gamma(1-\lambda)\left(1-\lambda^{m} \gamma^{m}\right)}{(1-\lambda \gamma)\left(1-\lambda^{m}\right)}\left\|J-J^{\prime}\right\|_{\infty} \end{aligned}\tag{5.9}∥∥?Tπ,λm?J?Tπ,λm?J′∥∥?∞??=1?λm1?λ?∥∥∥∥∥?i=1∑m?λi?1( Tπi?J?Tπi?J′)∥∥∥∥∥?∞?≤1?λm1?λ?(i=1∑m?λi?1∥∥? Tπi?J?Tπi?J′∥∥?∞?)≤1?λm1?λ?(i=1∑m?λi?1γi∥J?J′∥∞?)≤(1?λγ)(1?λm)γ(1?λ)(1?λmγm)?∥J?J′∥∞??(5.9)

由于压缩系数严格小于1,即证。

值得注意的是,当策略评估的数量mmm增加时,几何平均贝尔曼算子Tπ,λm\mathrm{T}_{\pi, \lambda}^{m}Tπ,λm?的压缩系数就会下降。换句话说,mmm的总成本越大,相关算法的收敛速度就越快。请注意,当mmm的总成本上升到无穷大时,这样的结构实际上是不现实的。然而,通过使mmm的总成本达到无穷大,很容易得出几何平均的贝尔曼算子为

Tπ,λ∞:RK→RK,J?(1?λ)∑i=1∞λi?1TπiJ(5.10)\mathrm{T}_{\pi, \lambda}^{\infty}: \mathbb{R}^{K} \rightarrow \mathbb{R}^{K}, \quad J \mapsto(1-\lambda) \sum_{i=1}^{\infty} \lambda^{i-1} \mathrm{~T}_{\pi}^{i} J\tag{5.10}Tπ,λ∞?:RK→RK,J?(1?λ)i=1∑∞?λi?1 Tπi?J(5.10)

在我们的Notes中被称为λ\lambdaλ几何平均贝尔曼算子。

Proposition 5.3 λ\lambdaλ几何平均贝尔曼算子的压缩性 (Contraction property of the λ\lambdaλ-geometrically averaged Bellman operator)

给定一个无限范围MDP{X,U,p,g,γ}M D P\{\mathcal{X}, \mathcal{U}, p, g, \gamma\}MDP{ X,U,p,g,γ},让J,J′∈RKJ, J^{\prime} \in \mathbb{R}^{K}J,J′∈RK,λ\lambdaλ几何平均贝尔曼算子是一个关于无穷范数的压缩映射,即

∥Tπ,λ∞J?Tπ,λ∞J′∥∞≤γ(1?λ)1?λγ∥J?J′∥∞(5.11)\left\|\mathrm{T}_{\pi, \lambda}^{\infty} J-\mathrm{T}_{\pi, \lambda}^{\infty} J^{\prime}\right\|_{\infty} \leq \frac{\gamma(1-\lambda)}{1-\lambda \gamma}\left\|J-J^{\prime}\right\|_{\infty}\tag{5.11}∥∥?Tπ,λ∞?J?Tπ,λ∞?J′∥∥?∞?≤1?λγγ(1?λ)?∥J?J′∥∞?(5.11)

Proof

这个结果来自于如命题5.2(eq. )中的将mmm推导到无穷大。

显然,λ\lambdaλ几何平均贝尔曼算子只有理论上的意义,没有实际用途。在下文中,我们研究了一个精心设计的贝尔曼算子,它最初是受λ\lambdaλ几何平均贝尔曼算子启发。它与RL背景下的 资格迹(eligibility trace) 概念的联系将在后面的章节中讨论。

5.3 λ\lambdaλ-策略迭代算法 (λ\lambdaλ-Policy Iteration Algorithm)

让J′∈RKJ^{\prime} \in \mathbb{R}^{K}J′∈RK成为总成本函数Jπ∈RKJ_{\pi} \in \mathbb{R}^{K}Jπ?∈RK的一个估计。我们定义以下映射

Tπ,J′(λ):RK→RK,J?(1?λ)TπJ′+λTπJ(5.12)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}: \mathbb{R}^{K} \rightarrow \mathbb{R}^{K}, \quad J \mapsto(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi} J\tag{5.12}Tπ,J′(λ)?:RK→RK,J?(1?λ)Tπ?J′+λTπ?J(5.12)

我们称其为称为λ\lambdaλ-级联贝尔曼算子(λ\lambdaλ-cascaded Bellman operator)。很容易看出,对于任何J1,J2∈RKJ_{1}, J_{2} \in \mathbb{R}^{K}J1?,J2?∈RK中,我们有

∥Tπ,J′(λ)J1?Tπ,J′(λ)J2∥∞≤λ∥TπJ1?TπJ2∥∞≤λγ∥J1?J2∥∞(5.13)\begin{aligned} \left\|\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{1}-\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{2}\right\|_{\infty} & \leq \lambda\left\|\mathrm{T}_{\pi} J_{1}-\mathrm{T}_{\pi} J_{2}\right\|_{\infty} \\ & \leq \lambda \gamma\left\|J_{1}-J_{2}\right\|_{\infty} \end{aligned}\tag{5.13}∥∥∥?Tπ,J′(λ)?J1??Tπ,J′(λ)?J2?∥∥∥?∞??≤λ∥Tπ?J1??Tπ?J2?∥∞?≤λγ∥J1??J2?∥∞??(5.13)

显然,λ\lambdaλ-级联贝尔曼算子是一个压缩,其模为λγ\lambda\gammaλγ,相对于无穷范数。也就是说,它有一个唯一的固定点。当λ=0\lambda=0λ=0时,Tπ,J′(0)\mathrm{T}_{\pi,{J^{\prime}}}^{(0)}Tπ,J′(0)?的固定点是TπJ′\mathrm{T}_{\pi} J^{\prime}Tπ?J′,这基本上是贝尔曼算子Tπ\mathrm{T}_{\pi}Tπ?对估计值J′J^{\prime}J′的一步应用。 当λ=1\lambda=1λ=1时,Tπ,J′(1)\mathrm{T}_{\pi, J^{\prime}}^{(1)}Tπ,J′(1)?的固定点满足以下等式

J=TπJ(5.14)J=\mathrm{T}_{\pi} J\tag{5.14}J=Tπ?J(5.14)

这确实是策略π\piπ的真实总成本函数,即JπJ_{\pi}Jπ?因此,公式(5.12)中定义的λ\lambdaλ-级联贝尔曼算子Tπ,J′(λ)T_{\pi, J^{\prime}}^{( \lambda)}Tπ,J′(λ)?的固定点可以被视为单步贝尔曼算子和无限步贝尔曼算子的凸组合。这样的关系与λ\lambdaλ-贝尔曼算子Tπ∞\mathrm{T}_{\pi}^{\infty}Tπ∞?的构造相吻合。

有趣的是,λ\lambdaλ-级联贝尔曼算子的单调性和恒定位移特性也都得到了保留。

Lemma 5.2 λ\lambdaλ-级联的贝尔曼算子的单调性和恒定位移特性 (Monotonicity and constant shift of the λ\lambdaλ-cascaded Bellman operator)

给定一个无限范围MDP{X,U,p,g,γ}M D P\{\mathcal{X}, \mathcal{U}, p, g, \gamma\}MDP{ X,U,p,g,γ},λ\lambdaλ-级联贝尔曼算子Tπ,J′(λ)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}Tπ,J′(λ)?同时具有单调性和恒移性。

(1) 对于所有x∈Xx \in \mathcal{X}x∈X,让J1,J2∈RKJ_{1}, J_{2} \in \mathbb{R}^{K}J1?,J2?∈RK 满足J1(x)≤J2(x)J_{1}(x)\leq J_{2}(x)J1?(x)≤J2?(x)。那么对于k=1,2,…k=1,2, \ldotsk=1,2,…,我们有

(Tπ,J′(λ))kJ1(x)≤(Tπ,J′(λ))kJ2(x)(5.15)\left(\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\right)^{k} J_{1}(x) \leq\left(\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\right)^{k} J_{2}(x)\tag{5.15}(Tπ,J′(λ)?)kJ1?(x)≤(Tπ,J′(λ)?)kJ2?(x)(5.15)

(2) 让J1,J2∈RKJ_{1}, J_{2} \in \mathbb{R}^{K}J1?,J2?∈RK 满足J2J_{2}J2?为J1J_{1}J1?的常数移位函数的构造,即对于所有x∈Xx \in \mathcal{X}x∈X有J2(x)=J1(x)+cJ_{2}(x)=J_{1}(x)+cJ2?(x)=J1?(x)+c。那么对于k=1,2,…k=1,2, \ldotsk=1,2,…,我们有

(Tπ,J′(λ))kJ2(x)=(Tπ,J′(λ))kJ1(x)+λkγkc(5.16)\left(\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\right)^{k} J_{2}(x)=\left(\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\right)^{k} J_{1}(x)+\lambda^{k} \gamma^{k} c\tag{5.16}(Tπ,J′(λ)?)kJ2?(x)=(Tπ,J′(λ)?)kJ1?(x)+λkγkc(5.16)

Proof

回忆定义 Tπ,J′(λ)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}Tπ,J′(λ)?, 我们有

Tπ,J′(λ)J1=(1?λ)TπJ′+λTπJ1≤(1?λ)TπJ′+λTπJ2=Tπ,J′(λ)J2(5.17)\begin{aligned} \mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{1} &=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi} J_{1} \\ & \leq(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi} J_{2} \\ &=\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{2} \end{aligned}\tag{5.17}Tπ,J′(λ)?J1??=(1?λ)Tπ?J′+λTπ?J1?≤(1?λ)Tπ?J′+λTπ?J2?=Tπ,J′(λ)?J2??(5.17)

其中不等式是由于标准贝尔曼算子 Tπ\mathrm{T}_{\pi}Tπ?的单调性,以及

Tπ,J′(λ)J2=(1?λ)TπJ′+λTπ(J1+c1)=(1?λ)TπJ′+λTπJ1+λγc1=Tπ,J′(λ)J1+λγc1(5.18)\begin{aligned} \mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{2} &=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi}\left(J_{1}+c \mathbf{1}\right) \\ &=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi} J_{1}+\lambda \gamma c \mathbf{1} \\ &=\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{1}+\lambda \gamma c \mathbf{1} \end{aligned}\tag{5.18}Tπ,J′(λ)?J2??=(1?λ)Tπ?J′+λTπ?(J1?+c1)=(1?λ)Tπ?J′+λTπ?J1?+λγc1=Tπ,J′(λ)?J1?+λγc1?(5.18)

这个结果简单的从一个归纳论证中得出。

Proposition 5.4 λ\lambdaλ-级联的贝尔曼算子的压缩特性 (Contraction property of the λ\lambdaλ-cascaded Bellman operator)

给定一个无限范围MDP{X,U,p,g,γ}M D P\{\mathcal{X}, \mathcal{U}, p, g, \gamma\}MDP{ X,U,p,g,γ},以及两个总成本函数估计值J1,J2∈RKJ_{1}, J_{2} \in \mathbb{R}^{K}J1?,J2?∈RK是有边界的。λ\lambdaλ-级联贝尔曼算子是一个关于无穷范数模为λγ\lambda \gammaλγ的压缩,即,

∥Tπ,J′(λ)J1?Tπ,J′(λ)J2∥∞≤λγ∥J1?J2∥∞(5.19)\left\|\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{1}-\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{2}\right\|_{\infty} \leq \lambda \gamma\left\|J_{1}-J_{2}\right\|_{\infty}\tag{5.19}∥∥∥?Tπ,J′(λ)?J1??Tπ,J′(λ)?J2?∥∥∥?∞?≤λγ∥J1??J2?∥∞?(5.19)

此外,λ\lambdaλ几何平均贝尔曼算子在J′J^{\prime}J′的一步评估是Tπ,J′(λ)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}Tπ,J′(λ)?的唯一固定点,即,

Tπ,J′(λ)(Tπ,λ∞J′)=Tπ,λ∞J′(5.20)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\left(\mathrm{T}_{\pi, \lambda}^{\infty} J^{\prime}\right)=\mathrm{T}_{\pi, \lambda}^{\infty} J^{\prime}\tag{5.20}Tπ,J′(λ)?(Tπ,λ∞?J′)=Tπ,λ∞?J′(5.20)

Proof

直接的有

∥Tπ,J′(λ)J1?Tπ,J′(λ)J2∥∞≤∥λTπJ1?λTπJ2∥∞≤λγ∥J1?J2∥∞(5.21)\begin{aligned} \left\|\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{1}-\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)} J_{2}\right\|_{\infty} & \leq\left\|\lambda \mathrm{T}_{\pi} J_{1}-\lambda \mathrm{T}_{\pi} J_{2}\right\|_{\infty} \\ & \leq \lambda \gamma\left\|J_{1}-J_{2}\right\|_{\infty} \end{aligned}\tag{5.21}∥∥∥?Tπ,J′(λ)?J1??Tπ,J′(λ)?J2?∥∥∥?∞??≤∥λTπ?J1??λTπ?J2?∥∞?≤λγ∥J1??J2?∥∞??(5.21)

其中简单地遵循贝尔曼算子的特性。将结果Tπ,λ∞J′\mathrm{T}_{\pi, \lambda}^{\infty} J^{\prime}Tπ,λ∞?J′ 代入到Tπ,J′\mathrm{T}_{\pi, J^{\prime}}Tπ,J′? 得到,

Tπ,J′(λ)(Tπ,λ∞J′)=Tπ,J′(λ)((1?λ)∑k=1∞λk?1TπkJ′)=(1?λ)TπJ′+λTπ((1?λ)∑k=1∞λk?1TπkJ′)=(1?λ)TπJ′+(1?λ)∑k=2∞λk?1TπkJ′=(1?λ)∑k=1∞λk?1TπkJ′(5.22)\begin{aligned} \mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\left(\mathrm{T}_{\pi, \lambda}^{\infty} J^{\prime}\right) &=\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}\left((1-\lambda) \sum_{k=1}^{\infty} \lambda^{k-1} \mathrm{~T}_{\pi}^{k} J^{\prime}\right) \\ &=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi}\left((1-\lambda) \sum_{k=1}^{\infty} \lambda^{k-1} \mathrm{~T}_{\pi}^{k} J^{\prime}\right) \\ &=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+(1-\lambda) \sum_{k=2}^{\infty} \lambda^{k-1} \mathrm{~T}_{\pi}^{k} J^{\prime} \\ &=(1-\lambda) \sum_{k=1}^{\infty} \lambda^{k-1} \mathrm{~T}_{\pi}^{k} J^{\prime} \end{aligned}\tag{5.22}Tπ,J′(λ)?(Tπ,λ∞?J′)?=Tπ,J′(λ)?((1?λ)k=1∑∞?λk?1 Tπk?J′)=(1?λ)Tπ?J′+λTπ?((1?λ)k=1∑∞?λk?1 Tπk?J′)=(1?λ)Tπ?J′+(1?λ)k=2∑∞?λk?1 Tπk?J′=(1?λ)k=1∑∞?λk?1 Tπk?J′?(5.22)

即证。

显然,λ\lambdaλ几何平均贝尔曼算子Tπ,λ∞\mathrm{T}_{\pi, \lambda}^{\infty}Tπ,λ∞?的评估可以通过找到λ\lambdaλ-级联贝尔曼算子Tπ,J′(λ)\mathrm{T}_{\pi, J^{\prime}}^{(\lambda)}Tπ,J′(λ)?的固定点直接计算,即

J=(1?λ)TπJ′+λTπJ(5.23)J=(1-\lambda) \mathrm{T}_{\pi} J^{\prime}+\lambda \mathrm{T}_{\pi} J\tag{5.23}J=(1?λ)Tπ?J′+λTπ?J(5.23)

在MDP的背景下,我们可以利用贝尔曼算子的紧凑表述的便利,如公式(4.25)(4.25)(4.25),这导致了固定点的闭式表达为

J=(IK?γλPπ)?1(Gπ+γ(1?λ)PπJ′)(5.24)J=\left(I_{K}-\gamma \lambda P_{\pi}\right)^{-1}\left(G_{\pi}+\gamma(1-\lambda) P_{\pi} J^{\prime}\right)\tag{5.24}J=(IK??γλPπ?)?1(Gπ?+γ(1?λ)Pπ?J′)(5.24)

通过采取与第4.4节中开发最优策略迭代相同的方法,我们构造了一个最优λ\lambdaλ策略迭代算法(Algorithm 4)。与证明Proposition 4.7的策略相同,可以得出以下关于最优λ\lambdaλ-PI算法的收敛性的结果

Proposition 5.5 最优λ\lambdaλ-PI算法的收敛性 (Convergence of Optimistic λ\lambdaλ-PI Algorithm)

给定一个无限范围MDP {X,U,p,g,γ}\{\mathcal{X}, \mathcal{U}, p, g, \gamma\}{ X,U,p,g,γ},让{Jk}\left\{J_{k}\right\}{ Jk?}和{πk}\left\{\pi_{k}\right\}{ πk?}是由最优λ\lambdaλ-PI算法生成的序列。然后JkJ_{k}Jk?收敛到J?J^{*}J?。此外,对于所有指数kkk大于某个指数κ\kappaκ,πk\pi_{k}πk?对于所有k>κk>\kappak>κ来说是最优的。

∥Jk+1?J?∥∞≤(γ(1?λ)(1?λmγm)1?λγ+λmγm)∥Jk?J?∥∞(5.25)\left\|J_{k+1}-J^{*}\right\|_{\infty} \leq\left(\frac{\gamma(1-\lambda)\left(1-\lambda^{m} \gamma^{m}\right)}{1-\lambda \gamma}+\lambda^{m} \gamma^{m}\right)\left\|J_{k}-J^{*}\right\|_{\infty}\tag{5.25}∥Jk+1??J?∥∞?≤(1?λγγ(1?λ)(1?λmγm)?+λmγm)∥Jk??J?∥∞?(5.25)

Proof

与简单OPI算法的证明类似,我们假设TgJ0≤J0\mathrm{T}_{\mathfrak{g}} J_{0} \leq J_{0}Tg?J0?≤J0?。应用策略改进,即Tπ0J0=TgJ0\mathrm{T}_{\pi_{0}} J_{0}=\mathrm{T}_{\mathfrak{g}} J_{0}Tπ0??J0?=Tg?J0?,意味着

Tπ0,J0(λ)J0=Tπ0J0=TgJ0≤J0(5.26)\mathrm{T}_{\pi_{0}, J_{0}}^{(\lambda)} J_{0}=\mathrm{T}_{\pi_{0}} J_{0}=\mathrm{T}_{\mathfrak{g}} J_{0} \leq J_{0}\tag{5.26}Tπ0?,J0?(λ)?J0?=Tπ0??J0?=Tg?J0?≤J0?(5.26)

让我们假设TπkJk≤Jk\mathrm{T}_{\pi_{k}} J_{k} \leq J_{k}Tπk??Jk?≤Jk?。根据公式(5.12)中λ\lambdaλ-级联贝尔曼算子的定义,我们有

Tπk,Jk(λ)Jk=TπkJk=TgJk≤Jk(5.27)\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)} J_{k}=\mathrm{T}_{\pi_{k}} J_{k}=\mathrm{T}_{\mathfrak{g}} J_{k} \leq J_{k}\tag{5.27}Tπk?,Jk?(λ)?Jk?=Tπk??Jk?=Tg?Jk?≤Jk?(5.27)

然后结构 Jk+1=(Tπk,Jk(λ))mkJkJ_{k+1}=\left(\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)}\right)^{m_{k}} J_{k}Jk+1?=(Tπk?,Jk?(λ)?)mk?Jk? 意味着 Jk+1≤JkJ_{k+1} \leq J_{k}Jk+1?≤Jk?。因此,我们有

Tπk+1Jk+1=TgJk+1≤TπkJk+1=(1?λ)TπkJk+1+λTπkJk+1≤(1?λ)TπkJk+λTπkJk+1=Tπk,Jk(λ)Jk+1=Tπk,Jk(λ)?(Tπk,Jk(λ))mkJk≤Jk+1,(5.28)\begin{aligned} \mathrm{T}_{\pi_{k+1}} J_{k+1} &=\mathrm{T}_{\mathfrak{g}} J_{k+1} \\ & \leq \mathrm{T}_{\pi_{k} J_{k+1}} \\ &=(1-\lambda) \mathrm{T}_{\pi_{k}} J_{k+1}+\lambda \mathrm{T}_{\pi_{k}} J_{k+1} \\ & \leq(1-\lambda) \mathrm{T}_{\pi_{k}} J_{k}+\lambda \mathrm{T}_{\pi_{k}} J_{k+1} \\ &=\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)} J_{k+1} \\ &=\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)} \circ\left(\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)}\right)^{m_{k}} J_{k} \\ & \leq J_{k+1}, \end{aligned}\tag{5.28}Tπk+1??Jk+1??=Tg?Jk+1?≤Tπk?Jk+1??=(1?λ)Tπk??Jk+1?+λTπk??Jk+1?≤(1?λ)Tπk??Jk?+λTπk??Jk+1?=Tπk?,Jk?(λ)?Jk+1?=Tπk?,Jk?(λ)??(Tπk?,Jk?(λ)?)mk?Jk?≤Jk+1?,?(5.28)

其中最后一个不等式由λ\lambdaλ-级联贝尔曼算子的单调性得出。简而言之,我们只需证明不等式

Tπk,Jk(λ)Jk=TπkJk=TgJk≤Jk(5.29)\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)} J_{k}=\mathrm{T}_{\pi_{k}} J_{k}=\mathrm{T}_{\mathfrak{g}} J_{k} \leq J_{k}\tag{5.29}Tπk?,Jk?(λ)?Jk?=Tπk??Jk?=Tg?Jk?≤Jk?(5.29)

对于由最优λ\lambdaλ策略迭代算法产生的所有JkJ_{k}Jk?序列都成立。最后,对于任何kkk,我们有

Jk+1=(Tπk,Jk(λ))mkJk≤TπkJk=TgJk(5.30)\begin{aligned} J_{k+1} &=\left(\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)}\right)^{m_{k}} J_{k} \\ & \leq \mathrm{T}_{\pi_{k}} J_{k} \\ &=\mathrm{T}_{\mathfrak{g}} J_{k} \end{aligned}\tag{5.30}Jk+1??=(Tπk?,Jk?(λ)?)mk?Jk?≤Tπk??Jk?=Tg?Jk??(5.30)

作为结果有,

J?≤Jk≤TgkJ0(5.31)J^{*} \leq J_{k} \leq \mathrm{T}_{\mathfrak{g}}^{k} J_{0}\tag{5.31}J?≤Jk?≤Tgk?J0?(5.31)

k→∞k\rightarrow\inftyk→∞导致的结果是JkJ_{k}Jk?在极限时收敛于J?J^{*}J?。 没有假设 TgJ0≤J0\mathrm{T}_{\mathfrak{g}} J_{0} \leq J_{0}Tg?J0?≤J0?的结果与证明命题4.7的思路相同。

对于所有步骤我们假设mk=mm_{k}=mmk?=m。由于Jk→J?J_{k} \rightarrow J^{*}Jk?→J?,因此,对于所有kkk大于某个指数κ\kappaκ,使πk\pi_{k}πk?为最优策略,即Tπk=Tg\mathrm{T}_{\pi_{k}}=\mathrm{T}_{\mathfrak{g}}Tπk??=Tg?。 因此,我们有

∥Jk+1?J?∥∞=∥(Tπk,Jk(λ))mJk?J?∥∞=∥(1?λ)∑i=1mλi?1TπkiJk+λmTπkmJk?TπkJ?∥∞≤(γ(1?λ)(1?λmγm)1?λγ+λmγm)∥Jk?J?∥∞(5.32)\begin{aligned} \left\|J_{k+1}-J^{*}\right\|_{\infty} &=\left\|\left(\mathrm{T}_{\pi_{k}, J_{k}}^{(\lambda)}\right)^{m} J_{k}-J^{*}\right\|_{\infty} \\ &=\left\|(1-\lambda) \sum_{i=1}^{m} \lambda^{i-1} \mathrm{~T}_{\pi_{k}}^{i} J_{k}+\lambda^{m} \mathrm{~T}_{\pi_{k}}^{m} J_{k}-\mathrm{T}_{\pi_{k}} J^{*}\right\|_{\infty} \\ & \leq\left(\frac{\gamma(1-\lambda)\left(1-\lambda^{m} \gamma^{m}\right)}{1-\lambda \gamma}+\lambda^{m} \gamma^{m}\right)\left\|J_{k}-J^{*}\right\|_{\infty} \end{aligned}\tag{5.32}∥Jk+1??J?∥∞??=∥∥∥?(Tπk?,Jk?(λ)?)mJk??J?∥∥∥?∞?=∥∥∥∥∥?(1?λ)i=1∑m?λi?1 Tπk?i?Jk?+λm Tπk?m?Jk??Tπk??J?∥∥∥∥∥?∞?≤(1?λγγ(1?λ)(1?λmγm)?+λmγm)∥Jk??J?∥∞??(5.32)

其中,不等式由无穷范数的三角不等式得出。结果即证。

Remark 5.1

当m=1m=1m=1时,该结果与基本VI算法的结果相吻合。当m→∞m\rightarrow\inftym→∞,对于所有kkk大于某个指数κ\kappaκ,使得πk\pi_{k}πk?是一个最优策略时,总成本函数的收敛特点为

∥Jk+1?J?∥∞≤γ(1?λ)1?λγ∥Jk?J?∥∞(5.33)\left\|J_{k+1}-J^{*}\right\|_{\infty} \leq \frac{\gamma(1-\lambda)}{1-\lambda \gamma}\left\|J_{k}-J^{*}\right\|_{\infty}\tag{5.33}∥Jk+1??J?∥∞?≤1?λγγ(1?λ)?∥Jk??J?∥∞?(5.33)

这与命题5.3中的λ\lambdaλ-几何平均贝尔曼算子的结果一致。

5.4 实例

Question 1: Optimistic λ\lambdaλ -Policy Iteration: E-Bus, extended

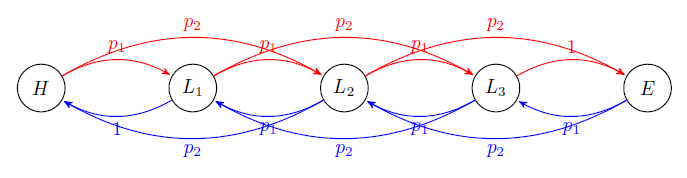

Consider a group of electric buses running round trips 24 hours a day. The task is to identify optimal operating actions at different battery states. The battery’s endurance and charging speed gradually decrease with the increase of battery life. Hence, for different buses, they have different transition probabilities between battery states. The following figure illustrates the state transitions between different states.

- Five states: HHH - high battery, EEE - empty battery, L1,L2,L3L_{1}, L_{2}, L_{3}L1?,L2?,L3? : three different low level battery statuses

- Two actions: SSS- continue to serve, C - charge

- Numbers on the edges refer to transition probabilities. p1=0.4,p2=0.6p_{1}=0.4, p_{2}=0.6p1?=0.4,p2?=0.6

- Discount factor γ=0.9\gamma=0.9γ=0.9 .

We choose the number of unserviced passengers as the local costs:

- In the high battery state, if it keeps the service, the unserviced passenger number is 0 .

- In all the low battery stats, if it keeps the service, the unserviced passenger number is 2 .

- In all the low battery state and empty battery, if it charges the battery, the unserviced passenger number is 5 .

Task 1

Implement one sweep of λ\lambdaλ -Policy Iteration Algorithm. Let us initialize total cost J0=0J_{0}=0J0?=0 and λ=0.8\lambda=0.8λ=0.8 .

(i) Generate a GIP π0\pi_{0}π0? from J0J_{0}J0? , and define its Bellman operator Tπ0\mathrm{T}_{\pi_{0}}Tπ0?? ;

(ii) Iterate the Bellman operator Tπ0\mathrm{T}_{\pi_{0}}Tπ0?? on J0J_{0}J0? till reaching the stopping criterion as ∥Ji+1?Ji∥∞<10?20\left\|J_{i+1}-J_{i}\right\|_{\infty}< 10^{-20}∥Ji+1??Ji?∥∞?<10?20 , where JiJ_{i}Ji? 's are evaluations of Tπ0\mathrm{T}_{\pi_{0}}Tπ0??

(iii) Iterate the \lambda -cascaded Bellman operator Tπ0,J0(λ)T_{\pi_{0}, J_{0}}^{(\lambda)}Tπ0?,J0?(λ)? on J0J_{0}J0? till reaching the same stopping criterion as in (ii);

(iv) Compare the results by task (ii) and (iii), as well as the number of iterations required till stopping.

import numpy as np

import matplotlib.pyplot as plt# transition probability

p1 = 0.4

p2 = 0.6# initial cost function

jh = jl1 = jl2 = jl3 = je = 0# cost of each state-action pair

ghs = 0

gl1s = gl2s = gl3s = 2

gec = gl3c = gl2c = gl1c = 5lmbd = 0.6

gamma = 0.9 # discount factordef T_pi(J_k, ul1, ul2, ul3):"""J_k: numpy array, total cost of five statesul1, ul2, ul3: str, 'S' or 'C'return: numpy array, updated total cost of five states"""jh, jl1, jl2, jl3, je = float(J_k[0]), float(J_k[1]), float(J_k[2]), float(J_k[3]), float(J_k[4])jh_ = p1 * (ghs + gamma * jl1) + p2 * (ghs + gamma * jl2) # Sif ul1 == 'S':jl1_ = p1 * (gl1s + gamma * jl2) + p2 * (gl1s + gamma * jl3)elif ul1 == 'C':jl1_ = gl1c + gamma * jhif ul2 == 'S':jl2_ = p1 * (gl2s + gamma * jl3) + p2 * (gl2s + gamma * je)elif ul2 == 'C':jl2_ = p1 * (gl2c + gamma * jl1) + p2 * (gl2c + gamma * jh)if ul3 == 'S':jl3_ = gl3s + gamma * jeelif ul3 == 'C':jl3_ = p1 * (gl3c + gamma * jl2) + p2 * (gl3c + gamma * jl1)je_ = p1 * (gec + gamma * jl3) + p2 * (gec + gamma * jl2) # Creturn np.array([jh_, jl1_, jl2_, jl3_, je_])# Q1: Generate GIP and its Bellman operator T_pi0

jl1_all = [p1 * (gl1s + gamma * jl2) + p2 * (gl1s + gamma * jl3), gl1c + gamma * jh]

ul1 = jl1_all.index(min(jl1_all))jl2_all = [p1 * (gl2s + gamma * jl3) + p2 * (gl2s + gamma * je),p1 * (gl2c + gamma * jl1) + p2 * (gl2c + gamma * jh)]

ul2 = jl2_all.index(min(jl2_all))jl3_all = [gl3s + gamma * je,p1 * (gl3c + gamma * jl2) + p2 * (gl3c + gamma * jl1)]

ul3 = jl3_all.index(min(jl3_all))Actions = ['S', 'C']

print('Q1:\tThe GIPs of L1, L2, L3 are {}, {}, {}'.format(Actions[ul1], Actions[ul2], Actions[ul3]))J_i = [0., 0., 0., 0., 0.]

T_pi0 = T_pi(J_i, Actions[ul1], Actions[ul2], Actions[ul3])

print('\t and its Bellman operator are {}'.format(T_pi0))# Q2 : the number of iterations of Bellman operator

Threshold = 1

i = 0

J_i_data = []

while Threshold > 1e-2:J_i_ = T_pi(J_i, Actions[ul1], Actions[ul2], Actions[ul3])Threshold = max(abs(J_i_ - J_i))J_i_data.append(J_i)J_i = J_i_i += 1print('\nQ2: After {} iterations the cost function J_i are {}'.format(i, J_i))# Q3: the number of iterations of lambda-cascade Bellman operator

Threshold = 1

k = 0

J_k = [0., 0., 0., 0., 0.]

J_0 = [0., 0., 0., 0., 0.]

J_k_data = []

while Threshold > 1e-2:J_k_ = (1 - lmbd) * T_pi(J_0, Actions[ul1], Actions[ul2], Actions[ul3]) \+ lmbd * (T_pi(J_k, Actions[ul1], Actions[ul2], Actions[ul3]))Threshold = max(abs(J_k_ - J_k))J_k_data.append(J_k)J_k = J_k_k += 1print('\nQ3: After {} iterations the cost function J_k are {}'.format(k, J_k))# Q4: compare the results and plot the results

J_i_error = np.max(np.array(abs(J_i_data - J_i)), axis=1)

J_k_error = np.max(np.array(abs(J_k_data - J_k)), axis=1)

fig = plt.figure()

ax = fig.add_subplot(1, 1, 1)

ax.set_xlabel('Iteration i')

ax.set_ylabel('$||J - J*||_{\infty}$')

ax.plot(J_i_error, marker='*', label='$PE$')

ax.plot(J_k_error, marker='.', label='$\lambda \ - \ PE$')

ax.legend()

ax.set_title('Threshlod = 0.01')

plt.show()Th outputs are:

Q1: The GIPs of L1, L2, L3 are S, S, Sand its Bellman operator are [0. 2. 2. 2. 5.]Q2: After 57 iterations the cost function J_i are [28.71067246 31.33441578 32.29354488 32.80920569 34.24159683]Q3: After 11 iterations the cost function J_k are [3.15423695 5.43385663 6.12451136 6.52698135 8.39038175]

Task 2

Implement and run the \lambda -Policy Iteration Algorithm till convergence to generate an optimal policy. Let us set the number of optimistic λ\lambdaλ -Policy evaluation step mk=5m_{k}=5mk?=5 .

# Task 2 : generate the optimal policy

print('\n-------The optimal policy---------\n')

J_k = np.array([0., 0., 0., 0., 0.])

for k in range(0, 5):# Policy Inprovementjh, jl1, jl2, jl3, je = J_kul1 = np.argmin([p1 * (gl1s + gamma * jl2) + p2 * (gl1s + gamma * jl3), gl1c + gamma * jh])ul2 = np.argmin([p1 * (gl2s + gamma * jl3) + p2 * (gl2s + gamma * je),p1 * (gl2c + gamma * jl1) + p2 * (gl2c + gamma * jh)])ul3 = np.argmin([gl3s + gamma * je,p1 * (gl3c + gamma * jl2) + p2 * (gl3c + gamma * jl1)])# Policy Evaluationfor m in range(5):J_k_ = (1 - lmbd) * T_pi(J_k, Actions[ul1], Actions[ul2], Actions[ul3]) \+ lmbd * (T_pi(J_k, Actions[ul1], Actions[ul2], Actions[ul3]))J_k = J_k_print('In {}-th step the optimal policy are in L1 is {}, in L2 is {}, ''in L3 is {}'.format(k, Actions[ul1], Actions[ul2], Actions[ul3]))

the outputs are

-------The optimal policy---------In 0-th step the optimal policy are in L1 is S, in L2 is S, in L3 is S

In 1-th step the optimal policy are in L1 is C, in L2 is C, in L3 is S

In 2-th step the optimal policy are in L1 is C, in L2 is C, in L3 is S

In 3-th step the optimal policy are in L1 is C, in L2 is C, in L3 is S

In 4-th step the optimal policy are in L1 is C, in L2 is C, in L3 is S