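Progress in face recognition over the last few years has run along essentially two lines: first, targeting mobile devices; second, improving the loss function so that the trained model is more effective. The second line requires a loss that pushes each class to be distributed more compactly in feature space. Starting with NormFace, face recognition entered an era in which cosine similarity is used to decide whether two faces match. Both the weights and the features are L2-normalized, which mitigates the long-tail problem so that class imbalance is less of a constraint on accuracy.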

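The center loss work pointed out that features learned with a softmax-based loss radiate outward from the origin. Face verification can therefore be done by computing the angle between the feature vectors of two samples: a sufficiently small angle indicates that the two samples belong to the same class. Later work such as NormFace and SphereFace, together with losses such as A-Softmax, L2-Softmax and AM-Softmax, tries to place samples on a hypersphere as far as possible, while widening the angles between classes and making each class more compact.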
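The angle-based verification described above can be sketched in a few lines. This is a minimal illustration, not code from any of the cited papers: the function names, the toy 3-D vectors and the 60-degree threshold are all assumptions chosen for the example.

```python
import math

def angle_between(a, b):
    """Return the angle in radians between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)

def same_identity(a, b, max_angle_deg=60.0):
    """Hypothetical decision rule: same person if the angle is below a threshold."""
    return math.degrees(angle_between(a, b)) < max_angle_deg

# Toy 3-D "embeddings"; real models output far more dimensions.
anchor = [1.0, 0.2, 0.0]
close = [2.0, 0.5, 0.1]   # different length, similar direction
far = [-0.1, 1.0, 0.3]    # nearly orthogonal to the anchor

print(same_identity(anchor, close), same_identity(anchor, far))
```

Only the direction of each vector matters here, which is why normalizing features and weights fits this kind of decision rule. In practice the threshold would be tuned on a validation set.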

Next, I will briefly introduce the loss functions of CosFace and ArcFace, along with the corresponding PyTorch code.

CosFace

$$loss_{cos}=\frac{1}{N_i}\sum_i -\log\frac{e^{s(\cos(\theta_{y_i,i})-m)}}{e^{s(\cos(\theta_{y_i,i})-m)}+\sum_{j\neq y_i}e^{s\cos(\theta_{j,i})}}$$

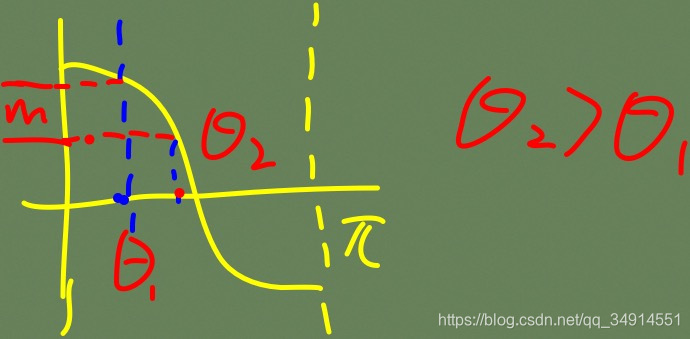

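The idea behind the CosFace loss is to subtract a positive value $m$ in cosine space. On the monotonic interval $[0, \pi]$, $\cos(\theta_{y_i,i})-m$ is smaller than $\cos(\theta_{y_i,i})$, which is equivalent to a larger $\theta_{y_i,i}$: the sample is treated as if its angle to its own class center were larger than it really is. In other words, $m$ makes the training objective harder for every sample. By the time the loss converges, $\theta$ has to be smaller, so each class is more tightly clustered.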
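To make the "equivalent to a larger angle" argument concrete, the sketch below converts $\cos(\theta)-m$ back into an angle. The helper name `effective_angle_deg` is hypothetical, and m = 0.40 is simply the default used in the code in this post.

```python
import math

def effective_angle_deg(theta_deg, m=0.40):
    """Angle whose cosine equals cos(theta) - m, i.e. how far away the sample appears."""
    shifted = math.cos(math.radians(theta_deg)) - m
    # cos(theta) - m can drop below -1 for large theta; clamp before acos.
    return math.degrees(math.acos(max(-1.0, shifted)))

for theta in (10, 30, 45, 60):
    print(f"true angle {theta:2d} deg -> appears as {effective_angle_deg(theta):.1f} deg")
```

Every true angle is mapped to a larger apparent angle, so the network has to shrink the true angle further before the loss is satisfied.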

Code walkthrough

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.nn import Parameter


    class AddMarginProduct(nn.Module):
        r"""Implementation of large margin cosine distance.

        Args:
            in_features: size of each input sample
            out_features: size of each output sample
            s: norm of input feature
            m: margin

        Computes cos(theta) - m for the target class.
        """

        def __init__(self, in_features, out_features, s=30.0, m=0.40):
            super(AddMarginProduct, self).__init__()
            self.in_features = in_features
            self.out_features = out_features
            self.s = s
            self.m = m
            self.weight = Parameter(torch.FloatTensor(out_features, in_features))
            nn.init.xavier_uniform_(self.weight)

        def forward(self, input, label):
            # --------------------------- cos(theta) & phi(theta) ---------------------------
            cosine = F.linear(F.normalize(input), F.normalize(self.weight))
            phi = cosine - self.m
            # --------------------------- convert label to one-hot ---------------------------
            # Allocate on the same device as the logits instead of hard-coding 'cuda'.
            one_hot = torch.zeros(cosine.size(), device=cosine.device)
            one_hot.scatter_(1, label.view(-1, 1).long(), 1)
            # ------------- torch.where(out_i = x_i if condition_i else y_i) -------------
            # you can use torch.where if your torch.__version__ is 0.4
            output = (one_hot * phi) + ((1.0 - one_hot) * cosine)
            output *= self.s
            return output

        def __repr__(self):
            return self.__class__.__name__ + '(' \
                   + 'in_features=' + str(self.in_features) \
                   + ', out_features=' + str(self.out_features) \
                   + ', s=' + str(self.s) \
                   + ', m=' + str(self.m) + ')'

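Go straight to the forward method. The feature and the weight are first L2-normalized. Multiplying the two matrices then gives the cosine values directly, because every vector now has norm 1.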

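Next, m is subtracted. The one-hot label picks out the positions where m should be subtracted (the target class of each sample), and every other position keeps its plain cosine value. Finally the result is multiplied by s and returned, ready to be fed into the cross-entropy loss.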
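The steps just described can be traced by hand on a tiny batch. The following is a torch-free sketch written for illustration only: `l2_normalize`, `cosface_logits` and `cross_entropy` are hypothetical helper names, and the 2-D features and class centers are made-up numbers.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosface_logits(features, weights, labels, s=30.0, m=0.40):
    """Mirror of the forward pass: s * (cos - m) at the label, s * cos elsewhere."""
    feats = [l2_normalize(f) for f in features]
    centers = [l2_normalize(w) for w in weights]
    logits = []
    for f, label in zip(feats, labels):
        # The dot product of unit vectors is the cosine of the angle between them.
        cosines = [sum(a * b for a, b in zip(f, c)) for c in centers]
        logits.append([s * (c - m if j == label else c) for j, c in enumerate(cosines)])
    return logits

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy over the batch."""
    total = 0.0
    for row, label in zip(logits, labels):
        top = max(row)  # subtract the max for numerical stability
        log_sum = top + math.log(sum(math.exp(z - top) for z in row))
        total += log_sum - row[label]
    return total / len(labels)

features = [[3.0, 1.0], [0.5, 2.0]]   # 2 samples, 2-D features
weights = [[1.0, 0.0], [0.0, 1.0]]    # 2 class centers
labels = [0, 1]

logits = cosface_logits(features, weights, labels)
loss = cross_entropy(logits, labels)
loss_without_margin = cross_entropy(cosface_logits(features, weights, labels, m=0.0), labels)
print(loss, loss_without_margin)
```

With the same features, the margin version reports a larger loss than the m = 0 version, which is the extra pressure that drives each class to be more compact.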

ArcFace

Note that ArcFace happens to share its name with a face recognition product from ArcSoft (虹软).

The loss takes the following form:

$$loss_{arc}=\frac{1}{N_i}\sum_i -\log\frac{e^{s(\cos(\theta_{y_i,i}+m))}}{e^{s(\cos(\theta_{y_i,i}+m))}+\sum_{j\neq y_i}e^{s(\cos(\theta_{j,i}))}}$$

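The starting point of the ArcFace loss is to optimize the inter-class distance in arccosine (angle) space: adding $m$ to the angle makes the cosine value smaller on the monotonic interval $[0, \pi]$.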
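Because the network only produces $\cos(\theta)$, the shifted value $\cos(\theta+m)$ is usually obtained through the angle-addition identity rather than by calling arccos. A small check of that identity, assuming m = 0.50 and a hypothetical helper name `arcface_target_cosine`:

```python
import math

def arcface_target_cosine(cosine, m=0.50):
    """cos(theta + m) computed from cos(theta) alone via the angle-addition identity."""
    # sin(theta) is non-negative on [0, pi], so the positive square root is the right one.
    sine = math.sqrt(max(0.0, 1.0 - cosine * cosine))
    return cosine * math.cos(m) - sine * math.sin(m)

theta = math.radians(40)
cosine = math.cos(theta)

via_identity = arcface_target_cosine(cosine)
direct = math.cos(theta + 0.50)
print(cosine, via_identity, direct)
```

The two results agree, and both are smaller than the original cosine, which is the penalty the loss applies to the target class.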

Code walkthrough

    import math

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.nn import Parameter


    class ArcMarginProduct(nn.Module):
        r"""Implementation of large margin arc distance.

        Args:
            in_features: size of each input sample
            out_features: size of each output sample
            s: norm of input feature
            m: margin

        Computes cos(theta + m) for the target class.
        """

        def __init__(self, in_features, out_features, s=30.0, m=0.50, easy_margin=False):
            super(ArcMarginProduct, self).__init__()
            self.in_features = in_features
            self.out_features = out_features
            self.s = s
            self.m = m
            self.weight = Parameter(torch.FloatTensor(out_features, in_features))
            nn.init.xavier_uniform_(self.weight)

            self.easy_margin = easy_margin
            self.cos_m = math.cos(m)
            self.sin_m = math.sin(m)
            self.th = math.cos(math.pi - m)
            self.mm = math.sin(math.pi - m) * m

        def forward(self, input, label):
            cosine = F.linear(F.normalize(input), F.normalize(self.weight))
            # Clamp before sqrt so rounding error cannot produce NaN.
            sine = torch.sqrt((1.0 - torch.pow(cosine, 2)).clamp(0, 1))
            # cos(a+b) = cos(a)*cos(b) - sin(a)*sin(b)
            phi = cosine * self.cos_m - sine * self.sin_m
            if self.easy_margin:
                phi = torch.where(cosine > 0, phi, cosine)
            else:
                phi = torch.where(cosine > self.th, phi, cosine - self.mm)
            # Allocate on the same device as the logits instead of hard-coding 'cuda'.
            one_hot = torch.zeros(cosine.size(), device=cosine.device)
            one_hot.scatter_(1, label.view(-1, 1).long(), 1)
            output = (one_hot * phi) + ((1.0 - one_hot) * cosine)
            output *= self.s
            return output

Here m is an angle in radians; it is set to 0.5 in the experiments.

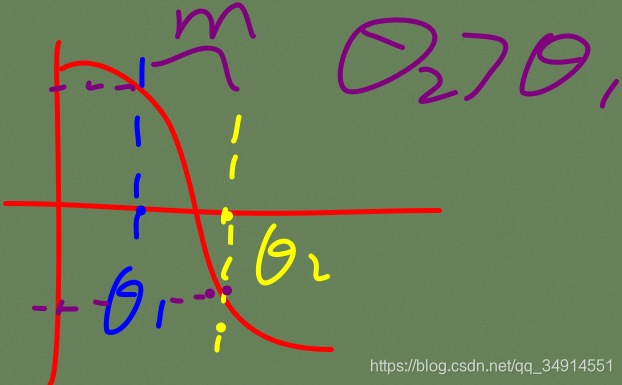

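The only part of the code likely to cause confusion is easy_margin. If easy_margin is True, m is added to the angle only when the angle is less than 90 degrees (cosine > 0). If it is False, self.th = cos($\pi$ - m) is used as the threshold. It helps to draw the cosine curve: on the monotonic interval $[0, \pi]$, the cosine is greater than this threshold exactly when theta is less than $\pi$ - m. So the role of th is to guarantee that theta + m still lies inside the monotonic interval $[0, \pi]$. When the condition fails, the code falls back to cosine - self.mm, where mm = sin($\pi$ - m) * m.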
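The branch logic is easier to follow on a single angle. The sketch below is a scalar restatement of the two branches under the same default m = 0.50; the function name `arcface_phi` and the two probe angles are assumptions made for this example.

```python
import math

def arcface_phi(theta, m=0.50, easy_margin=False):
    """Scalar version of the target-logit branches; theta is the angle in radians."""
    cosine = math.cos(theta)
    phi = math.cos(theta + m)
    if easy_margin:
        # Apply the margin only when the angle is below 90 degrees.
        return phi if cosine > 0 else cosine
    th = math.cos(math.pi - m)       # threshold on the cosine
    mm = math.sin(math.pi - m) * m   # penalty used once theta + m would pass pi
    return phi if cosine > th else cosine - mm

m = 0.50
inside = math.pi - m - 0.1    # theta + m < pi: the margin is applied
outside = math.pi - m + 0.1   # theta + m > pi: the fallback is used

print(arcface_phi(inside), arcface_phi(outside))
# Without the threshold, cos(theta + m) would start rising again past pi:
print(math.cos(inside + m), math.cos(outside + m))
```

Past $\pi$ - m the raw value cos(theta + m) stops decreasing, so a larger angle would no longer receive a larger penalty. The fallback keeps the target logit decreasing as theta grows.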