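This post records what I did to get the semantic segmentation part of my graduation project working. I can't offer a complete, detailed installation guide here; I am only writing down the choices I made, the references I used, and the problems I ran into during installation.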

First, my computer already had the NVIDIA driver, CUDA 9.0, and cuDNN 7.5 installed three months earlier.

Here is my initial configuration.

junbo@junbo:~$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2017 NVIDIA Corporation

Built on Fri_Sep__1_21:08:03_CDT_2017

Cuda compilation tools, release 9.0, V9.0.176

junbo@junbo:~$ cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

#define CUDNN_MAJOR 7

#define CUDNN_MINOR 5

#define CUDNN_PATCHLEVEL 0

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#include "driver_types.h"
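The grep above can also be done programmatically, which is handy when several toolkits are installed and each has its own cudnn.h. The following is a minimal sketch, not part of the original setup: the function name `cudnn_version` is made up for illustration, and it assumes the header uses the three `#define` lines shown in the output above.

```python
import re


def cudnn_version(header_text):
    """Return (major, minor, patch, combined) parsed from the text of cudnn.h."""
    parts = {}
    for name in ("MAJOR", "MINOR", "PATCHLEVEL"):
        match = re.search(r"#define\s+CUDNN_%s\s+(\d+)" % name, header_text)
        if match is None:
            raise ValueError("CUDNN_%s not found in header" % name)
        parts[name] = int(match.group(1))
    # Same formula as the CUDNN_VERSION macro in the header.
    combined = parts["MAJOR"] * 1000 + parts["MINOR"] * 100 + parts["PATCHLEVEL"]
    return parts["MAJOR"], parts["MINOR"], parts["PATCHLEVEL"], combined


# The three defines printed by the grep command above.
sample = """#define CUDNN_MAJOR 7
#define CUDNN_MINOR 5
#define CUDNN_PATCHLEVEL 0
"""
print(cudnn_version(sample))
```

To check a real install, pass in the contents of the header instead, for example `open("/usr/local/cuda/include/cudnn.h").read()`.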

junbo@junbo:~$ cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 384.81 Sat Sep 2 02:43:11 PDT 2017

GCC version: gcc version 5.4.0 20160609 (Ubuntu 5.4.0-6ubuntu1~16.04.4)

junbo@junbo:~$ nvidia-smi

Tue Apr 9 11:44:44 2019

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 384.81 Driver Version: 384.81 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 GeForce GTX 960M Off | 00000000:01:00.0 Off | N/A |

| N/A 44C P0 N/A / N/A | 0MiB / 2002MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

junbo@junbo:~$ gcc --version

gcc (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609

Copyright (C) 2015 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

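My initial plan was to build a Caffe with CUDA and cuDNN support, so that later I could train on my own data and also speed up segmentation.

SegNet needs a Caffe build that supports cuDNN v5, and cuDNN v5.1 pairs with CUDA 8.0, so I needed to install several versions side by side.

Version compatibility reference: https://blog.csdn.net/zl535320706/article/details/83474849

Installing multiple versions of CUDA and cuDNN on Ubuntu 16.04: https://blog.csdn.net/tunhuzhuang1836/article/details/79545625

Installing multiple CUDA versions and switching between them: https://blog.csdn.net/maple2014/article/details/78574275

First, install CUDA 8.0. Be sure to pick the GA2 .run file: the earlier release does not support the gcc 5.4 that ships with Ubuntu 16.04, and the deb package may reinstall the graphics driver.

These are the choices I made during installation: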

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 375.26?

(y)es/(n)o/(q)uit: n

Install the CUDA 8.0 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-8.0 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: n

Install the CUDA 8.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/junbo ]: n

Samples location must be an absolute path

Enter CUDA Samples Location

[ default is /home/junbo ]:

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-8.0

Samples: Installed in /home/junbo

Please make sure that

- PATH includes /usr/local/cuda-8.0/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-8.0/lib64, or, add /usr/local/cuda-8.0/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run the uninstall script in /usr/local/cuda-8.0/bin

Please see CUDA_Installation_Guide_Linux.pdf in /usr/local/cuda-8.0/doc/pdf for detailed information on setting up CUDA.

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 361.00 is required for CUDA 8.0 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run -silent -driver

Logfile is /tmp/cuda_install_6840.log

Note that after the installation finishes, CUDA still has to be added to the environment variables. Because this is a multi-version setup, the path to add should be the generic cuda path rather than one specific CUDA version; see: https://www.jianshu.com/p/6a6fbce9073f

What I actually did:

Add to ~/.bashrc:

export PATH=/usr/local/cuda-8.0/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64:$LD_LIBRARY_PATH

and change these lines in /etc/profile

export PATH=/usr/local/cuda-9.0/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-9.0/lib64:$LD_LIBRARY_PATH

to

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

Then delete the current symlink and re-link it to 8.0; see: https://blog.csdn.net/Maple2014/article/details/78574275
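Switching versions comes down to re-pointing one symlink, so it is easy to script. Here is a minimal sketch under stated assumptions: the helper name `switch_cuda` is invented for this example, toolkits are assumed to live in sibling directories named `cuda-<version>`, and the demo runs in a temporary directory rather than /usr/local so that it needs no root privileges.

```python
import os
import tempfile


def switch_cuda(root, version):
    """Point <root>/cuda at <root>/cuda-<version> and return the new link target."""
    target = os.path.join(root, "cuda-%s" % version)
    if not os.path.isdir(target):
        raise ValueError("no such toolkit directory: %s" % target)
    link = os.path.join(root, "cuda")
    if os.path.islink(link):
        os.remove(link)  # removes only the symlink, never the toolkit itself
    elif os.path.exists(link):
        raise ValueError("%s exists and is not a symlink" % link)
    os.symlink(target, link)
    return os.readlink(link)


# Demo in a scratch directory that mimics the /usr/local layout.
demo_root = tempfile.mkdtemp()
for v in ("8.0", "9.0"):
    os.mkdir(os.path.join(demo_root, "cuda-%s" % v))
print(switch_cuda(demo_root, "9.0"))
print(switch_cuda(demo_root, "8.0"))
```

On the real machine the call would be `switch_cuda("/usr/local", "8.0")`, run with root privileges. Because PATH and LD_LIBRARY_PATH only mention /usr/local/cuda, nothing else has to change when the link moves.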

Below is the output of deviceQuery from the 8.0 samples, which I used to check that the installation succeeded:

junbo@junbo:~/NVIDIA_CUDA-8.0_Samples/1_Utilities/deviceQuery$ sudo ./deviceQuery

[sudo] password for junbo:

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GTX 960M"

CUDA Driver Version / Runtime Version 9.0 / 8.0

CUDA Capability Major/Minor version number: 5.0

Total amount of global memory: 2003 MBytes (2100232192 bytes)

( 5) Multiprocessors, (128) CUDA Cores/MP: 640 CUDA Cores

GPU Max Clock rate: 1176 MHz (1.18 GHz)

Memory Clock rate: 2505 Mhz

Memory Bus Width: 128-bit

L2 Cache Size: 2097152 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(65536), 2D=(65536, 65536), 3D=(4096, 4096, 4096)

Maximum Layered 1D Texture Size, (num) layers 1D=(16384), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(16384, 16384), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 1 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 9.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = GeForce GTX 960M

Result = PASS

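Next, install cuDNN 5.1. To keep the versions matched, I installed the 2017 release of cuDNN 5.1 rather than the 2016 release of 5.0. Download: https://developer.nvidia.com/rdp/cudnn-archive

Installation steps: https://blog.csdn.net/forever__1234/article/details/79844844

At this point CUDA 8.0 (2017 release) and cuDNN v5.1 (2017) coexist with the earlier install, and the active path can be switched between them.

A few problems came up along the way.

1. My old .bashrc contained these three lines: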

export LD_LIBRARY_PATH=/home/junbo/cudnn/cuda/lib64:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=/usr/lib/x86_64-linux-gnu:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=/lib/x86_64-linux-gnu:$LD_LIBRARY_PATH

These were probably left over from the previous installation. With them in place, locate libcudnn.so found the library in /usr/lib/x86_64-linux-gnu, and locate libcuda.so likewise found it in /usr/lib/x86_64-linux-gnu. Those directories really do contain the files, although according to the online instructions they should not be there; they may have been added when I installed the ZED driver. I don't need them now, so I commented out the three lines above and kept only:

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

After rebooting, running locate again found these shared libraries under the cuda directory, as expected.
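One caveat: locate searches a file-name database, not the loader's search path, so it can report copies that are never actually picked up. A more direct check is to walk LD_LIBRARY_PATH itself. The sketch below is only an illustration; the helper name `dirs_containing` is made up, and it only looks at file names, not at which copy the dynamic loader would finally choose.

```python
import os


def dirs_containing(search_path, prefix):
    """List directories in a colon-separated path that hold files starting with prefix."""
    hits = []
    for directory in search_path.split(os.pathsep):
        # Skip empty entries (from stray colons) and directories that do not exist.
        if not directory or not os.path.isdir(directory):
            continue
        if any(name.startswith(prefix) for name in os.listdir(directory)):
            hits.append(directory)
    return hits


# Which LD_LIBRARY_PATH entries provide a cuDNN library in the current shell?
print(dirs_containing(os.environ.get("LD_LIBRARY_PATH", ""), "libcudnn.so"))
```

The result keeps the order of LD_LIBRARY_PATH, so the first entry is the one searched first among those directories.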

2. The other issue was the paths in /etc/profile, which used to say cuda-9.0 and are now all changed to cuda, i.e.

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

3. I carried on with the installation tutorial I had been following. make all && make test both ran correctly, but make runtest then failed with:

Cannot create Cublas handle. Cublas won't be available.

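Judging from the discussion here: https://github.com/BVLC/caffe/issues/736

it seems my GPU really does have a problem (on Windows, the graphics card would not work even after reinstalling the driver several times and even reinstalling the whole system). So I gave up on GPU Caffe and switched to a CPU-only Caffe.

I modified Makefile.config for a CPU-only build and ran the usual three steps again, make all && make test && make runtest, with no problems.

Next I built the Python interface with make pycaffe, which reported no errors, and then tried using caffe from Python: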

junbo@junbo:~/Seg/SegNet-Tutorial-master/caffe-segnet$ python

Python 2.7.12 (default, Nov 12 2018, 14:36:49)

[GCC 5.4.0 20160609] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import caffe

This failed with: ImportError: No module named google.protobuf.internal

Fix: sudo apt-get install python-protobuf; see: https://blog.csdn.net/dongjuexk/article/details/78567717

If you don't have pip, install it first. Running import caffe again then gave no error.

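I then tried the MNIST training example that ships with Caffe. Training ran normally, but a munmap_chunk pointer error appeared once the run finished. This kind of error usually points to a bug in the program itself, so I ignored it and moved on to the next step: using SegNet's bundled webcam_demo.py to segment my own images with a pre-trained model.

Special thanks here to a senior student from my lab. While browsing related blog posts I stumbled on his article (I actually recognized it from a file path naming scheme unique to our lab in the code he posted XD).

https://blog.csdn.net/weixin_39078049/article/details/79564896

First make sure the trained model and the weights file are in the expected paths, then edit webcam_demo.py.

Important: if you are using the CPU build of Caffe, change this line in the .py file

caffe.set_mode_gpu()

to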

caffe.set_mode_cpu()
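Instead of editing the script every time, the mode could be chosen from the command line. This is only a sketch of one way to do it: the `--cpu` flag and the helper names `parse_args` and `apply_mode` are hypothetical and are not part of webcam_demo.py; the only pycaffe calls used are `set_mode_cpu()` and `set_mode_gpu()`, which appear above.

```python
import argparse


def parse_args(argv=None):
    """Parse a hypothetical --cpu switch (not an option of the original script)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--cpu", action="store_true",
                        help="run on the CPU instead of the GPU")
    return parser.parse_args(argv)


def apply_mode(caffe_module, use_cpu):
    """Call set_mode_cpu() or set_mode_gpu() on the given pycaffe module."""
    if use_cpu:
        caffe_module.set_mode_cpu()
        return "cpu"
    caffe_module.set_mode_gpu()
    return "gpu"
```

Inside the script this would be used as `import caffe` followed by `apply_mode(caffe, parse_args().cpu)`, in place of the hard-coded call. With a CPU-only build the flag always has to be passed, since that build has no GPU mode to fall back on.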

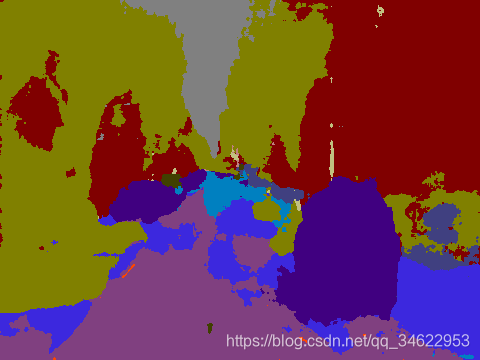

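Finally, I segmented the first image of KITTI sequence 00 on the CPU, as follows:

Note: because I am using a model that someone else trained at 480x360, my image was already resized by the time it went into the network, which is part of the reason the segmentation result is poor. The next step is to look for a model trained on the KITTI dataset, or to try a newer semantic segmentation method such as DeepLab.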
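To get a feel for how much the resize distorts the input, the scale factors can be worked out directly. The sketch below assumes the KITTI sequence 00 frames are 1241x376 pixels; that size is not stated in this post, so check your own images. The function name `resize_factors` is made up for the example.

```python
def resize_factors(src_w, src_h, dst_w=480, dst_h=360):
    """Return (x scale, y scale, x/y ratio) for a plain resize to dst_w x dst_h."""
    sx = dst_w / float(src_w)
    sy = dst_h / float(src_h)
    # A ratio of 1.0 means the aspect ratio is preserved.
    return sx, sy, sx / sy


# Assumed KITTI frame size: 1241 x 376 (an assumption, see above).
sx, sy, ratio = resize_factors(1241, 376)
print("x scale %.3f, y scale %.3f, x/y ratio %.3f" % (sx, sy, ratio))
```

Under that assumption the width shrinks far more than the height, so objects reach the network squeezed horizontally to roughly 40% of their original proportions, quite unlike the 4:3 images the model was trained on.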
